Chapter 7


B2B SEO: A/B Testing for Search

Emily Friedlander, Marketing and SEO Accounts at RankScience

SEO is a powerful acquisition channel, and it’s something every company can optimize regardless of the size of their marketing budget. But it can also be difficult to attribute traffic results to specific changes you make to your website, especially when you compare SEO to a channel like paid search ads, where measurement is straightforward, it’s easy to test variants, and there’s a clear basis for decisions about further investments.

One way to bring that quantitative rigor to your organic search efforts is to do SEO A/B testing.

Most marketers are familiar with A/B testing different versions of a webpage for conversion rate optimization (CRO) to increase signups once a user is already on the site. But the goal of SEO split testing is to get more people to click on your link in Google’s search results and visit your site in the first place.

With this method, you have data on your side as you try to drive more traffic to your site. You can justify larger investments when a website change works, and catch harmful changes before they damage your rankings.

Many B2B SaaS companies haven’t tried this technique; it’s still a somewhat advanced practice used mainly by a handful of analytically rigorous companies. This chapter aims to change that with tips and tactics you can start using today.

The rise of A/B testing for SEO

Consumer-focused companies have typically been ahead of the curve when it comes to search engine optimization, including SEO split testing. The practice was pulled further into the spotlight by Pinterest when growth engineer Julie Ahn shared a famous post on their successful SEO A/B testing framework. According to Julie, SEO is one of Pinterest’s biggest growth drivers, but in the past it had been difficult to manipulate it for predictable growth.

“You might have a good traffic day or a bad traffic day and not know what really triggered it,” she writes, “which often makes people think of SEO as magic rather than engineering.”

So Pinterest created an A/B testing framework to turn that magic into a “deterministic science.” This testing unearthed important clues about what users wanted. For example, one SEO test involved putting more text descriptions alongside images, and the positive result led to a series of follow-up experiments that resulted in a nearly 30% increase in traffic in 2014.

See? SEO is nothing to scoff at. Small changes can create large and lasting effects.

Zapier: SEO split-testing at a B2B SaaS company

It’s easy to see how Pinterest, with over 175 million active users, can grow rapidly with a few well-executed SEO optimizations. But how does this translate to a B2B context?

Let’s take a look at how Zapier relies on SEO.

Zapier builds automated workflows—a “glue” to connect 750+ web apps and take repetitive tasks off your hands. If you use Mailchimp and Trello, you can connect them to move data from one place to another automatically. Or connect Trello with Calendly, Calendly with Slack, etc.

Zapier’s site is, therefore, made of many, many webpages, each dedicated to individual apps and unique two-app combinations—over 500,000 pages, in fact.

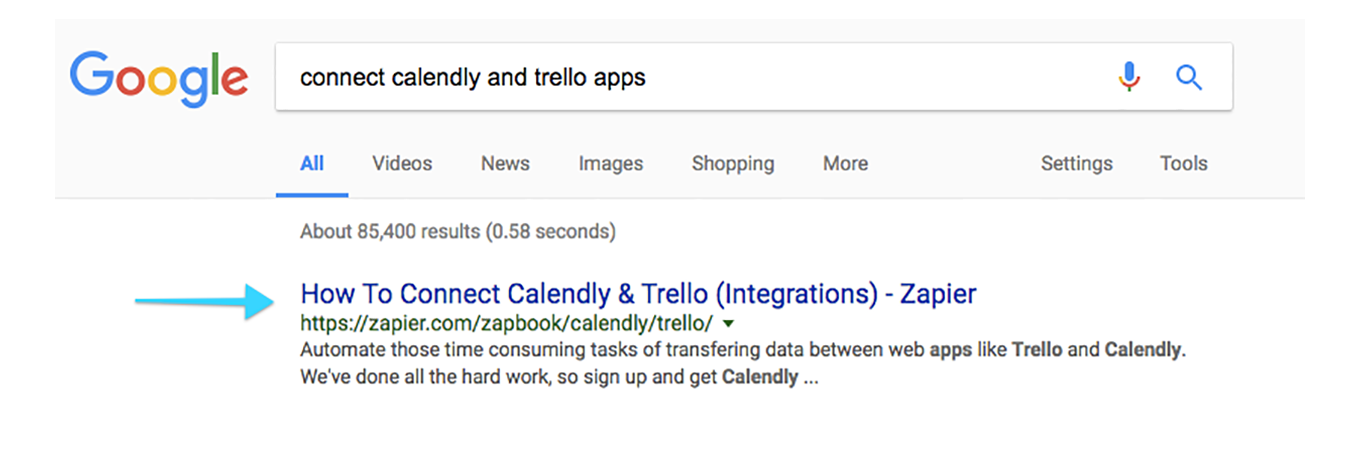

Imagine that someone who uses Trello and Calendly searches for a way to connect the two. Zapier wants their dedicated landing page for Trello and Calendly to be the top result.

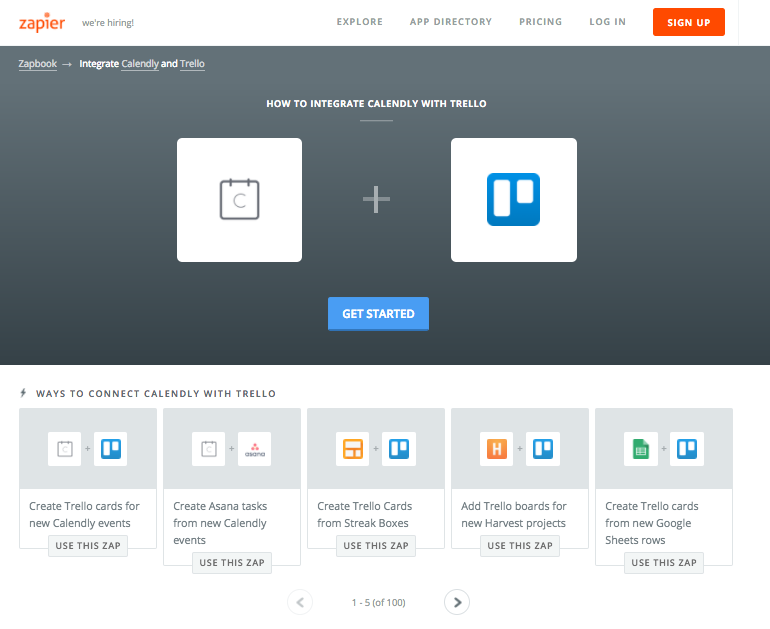

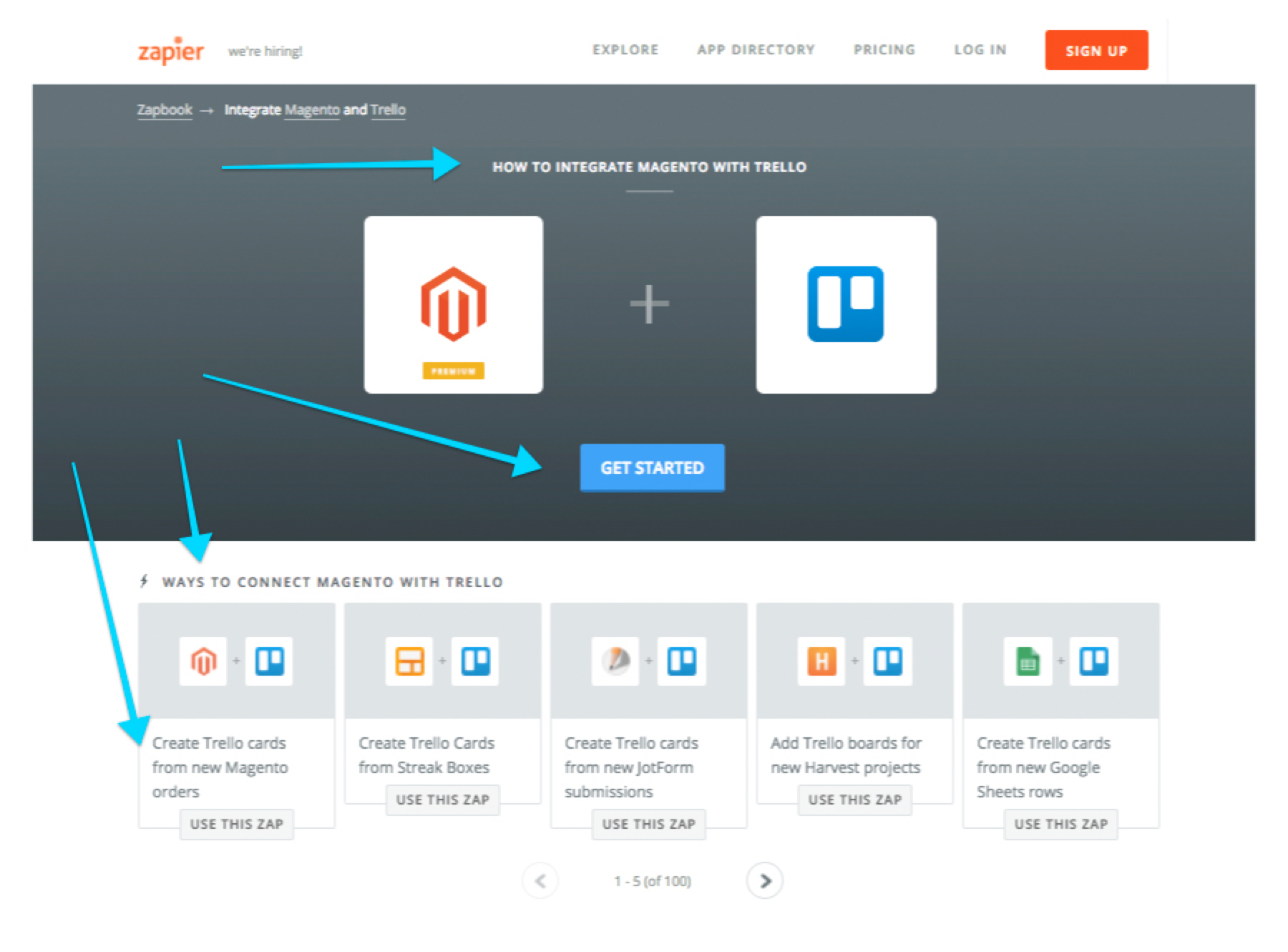

Clicking on that result leads to this page with instructions and tools.

Multiply this by endless app combinations. Organic search has been a long-lasting, cost-effective, critical growth channel for Zapier from the very start, because ranking high can be free with the right SEO efforts.

At first, Zapier performed really well in search, but as more competitors entered the market, growth began to plateau. They wanted to start experimenting with more SEO tactics, and as a data-driven company, they wanted to A/B test them before rolling out permanent changes. Instead of building an internal tool to support experimentation like Pinterest did, they came to work with my company, RankScience, where we’ve performed around 20 split tests over a year of collaboration.

Asad Zulfahri, a growth marketer at Zapier who is dedicated to SEO, explains why search remains such a core channel to this day. “With proper SEO, you don’t need as much money to expand rapidly; you don’t need to pay for PPC to grow. It’s one of the key elements of making Zapier profitable as a company,” he says. “For SEO, our goal is to more than double our growth.”

Before we show you some of Asad’s tips and tactics, here’s a quick primer on how SEO A/B testing works and how you can try it yourself.

The difference between A/B testing for SEO versus CRO

As we mentioned earlier, A/B testing for CRO hopes to get more people to convert while they’re on the page, while A/B testing for SEO hopes to drive more traffic to your page in the first place.

The changes you test for SEO should appeal to users scanning Google’s search results, as well as to Google’s crawler (Googlebot) and ranking systems, which determine your pages’ search rankings.

These two modes of testing involve one main structural difference. To split test for CRO, you split your site visitors (humans!) into two groups. Using a tool like Optimizely, VWO, Google Optimize, or an internal tool, you take all incoming visitor traffic and show half of the visitors your original page and the other half a variant that the tool serves.

But to split test for SEO, we divide your webpages—not the audience—into two groups. We take a stack of the same type of page (such as all your /blog or /product pages) and implement the SEO change in one group but not the other.

We do this because we can’t simply duplicate one original webpage and send half of Google’s traffic to one version and half to the other. Google doesn’t like seeing large amounts of duplicate content. And you can’t show users different content than you show Google, because that could be considered cloaking, which violates Google’s guidelines. On top of that, your original page might be much older and more established than your new variant, and therefore rank better, so the comparison wouldn’t be apples to apples.

Because SEO A/B tests split groups of pages rather than visitors, they’re better suited to large sites with many pages of the same type: a large group helps even out the pre-existing differences between individual pages in each group.

There is no hard-and-fast rule for how many pages you need. At RankScience, right now we’re recommending that you try this for groups of at least 100 similar pages (50 in each group). These might be 100 blog posts, 100 product pages, 100 review pages, 100 location pages, etc. (In Pinterest’s case, they were testing “board pages,” and in Zapier’s case, they’re testing product pages.) But of course, the number of pages required is always case by case. It depends on the site and existing search traffic—although 100 is a good rule of thumb.

What if my site doesn’t have hundreds of the same type of page?

If you have a small website without hundreds of pages of the same type, and/or with low search traffic, you might not be ready for A/B testing. Get started slowly by making safe SEO changes to your site without split-testing them:

- Make a change to one or a few pages that get reasonable traffic.

- Watch for immediate dips in traffic, then wait a defined period of time (I recommend at least 28 days).

- Use Search Console to compare impressions, clicks, and CTR before and after the change.

- Iterate as needed and roll out successful changes to more pages.
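To make the before-and-after comparison concrete, here is a minimal Python sketch. It assumes you have exported total clicks and impressions for two equal-length windows (say, 28 days before and 28 days after the change) from Search Console; the `Window` class and `compare` function are hypothetical helpers, not part of any Search Console API.

```python
from dataclasses import dataclass


@dataclass
class Window:
    """Search Console totals for one date range (hypothetical export)."""
    clicks: int
    impressions: int

    @property
    def ctr(self) -> float:
        # CTR = clicks / impressions; guard against an empty window.
        return self.clicks / self.impressions if self.impressions else 0.0


def pct_change(before: float, after: float) -> float:
    """Percent change from before to after (0.0 if before is zero)."""
    return (after - before) / before * 100 if before else 0.0


def compare(before: Window, after: Window) -> dict:
    """Percent change in clicks, impressions, and CTR between two windows."""
    return {
        "clicks": pct_change(before.clicks, after.clicks),
        "impressions": pct_change(before.impressions, after.impressions),
        "ctr": pct_change(before.ctr, after.ctr),
    }


# Made-up numbers for the 28 days before and after a change.
print(compare(Window(clicks=400, impressions=20_000),
              Window(clicks=480, impressions=20_000)))
```

Because there is no control group here, a shift in these numbers may also reflect seasonality or a Google algorithm update, which is exactly the uncertainty that split testing is designed to remove.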

Once your site grows, start trying some A/B testing.

To summarize the process:

- Pick an element for testing.

- Split your site pages into equal control and variant pages, and make the change on the pages in the variant group.

- See which group outperforms the other in terms of CTR and driving traffic to your site.
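The split in the second step is worth doing randomly rather than by hand, so that neither group ends up with all the strongest pages. A minimal Python sketch, assuming you have a list of same-type URLs; `split_pages` is a hypothetical helper, and the fixed seed simply makes the assignment reproducible when you come back to read results.

```python
import random


def split_pages(urls: list[str], seed: int = 42) -> tuple[list[str], list[str]]:
    """Randomly split URLs into control and variant groups of (nearly) equal size."""
    shuffled = sorted(urls)  # sort first so the result depends only on the seed
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


pages = [f"https://example.com/blog/post-{i}" for i in range(100)]
control, variant = split_pages(pages)
print(len(control), len(variant))  # 50 50
```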

What should I be measuring?

Keep an eye on three metrics:

- Total number of clicks (total number of site visitors from the SERP) - How many people clicked on your search result?

- Total impressions - How many people are seeing your page on the SERP? (You’ll also want to look at click-through rates—if you’re getting more impressions but not more clicks, you may need to adjust your titles and meta tags to make them more enticing.)

- Average SERP ranking - The average position of the pages in one group in Google’s search results (Search Console reports this as “Average position”).
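Here is one way to roll per-page numbers up into these group-level metrics, as a minimal Python sketch. It assumes a per-page export with clicks, impressions, and average position; `PageStats` and `group_metrics` are hypothetical, and weighting each page’s position by its impressions is our own assumption about how to combine pages (a simple unweighted mean is another option).

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """One row of a hypothetical per-page Search Console export."""
    url: str
    clicks: int
    impressions: int
    position: float  # average position for this page


def group_metrics(rows: list[PageStats]) -> dict:
    """Total clicks, impressions, CTR, and average position for one group."""
    clicks = sum(r.clicks for r in rows)
    impressions = sum(r.impressions for r in rows)
    # Weight each page's position by its impressions, so a page seen
    # 3,000 times counts more than one seen 10 times.
    avg_position = (
        sum(r.position * r.impressions for r in rows) / impressions
        if impressions else 0.0
    )
    return {
        "clicks": clicks,
        "impressions": impressions,
        "ctr": clicks / impressions if impressions else 0.0,
        "avg_position": avg_position,
    }


rows = [
    PageStats("https://example.com/a", clicks=30, impressions=1_000, position=4.0),
    PageStats("https://example.com/b", clicks=10, impressions=3_000, position=8.0),
]
print(group_metrics(rows))
```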

How many tests can I do at once?

Run only one A/B test at a time on a given set of pages, so you can isolate the effect of each change. If you’ve only got one stack of pages to split-test, run your tests one after another.

But you can run two or more tests at the same time on different areas (groups of pages) of your website if you segment pages carefully. At Zapier, the site’s URL structure lends itself well to segmentation because we can test all pages that share the same URL path. For instance, it’s easy to segment out and A/B test exactly one of these page types:

- Zapbook pages with one service: zapier.com/zapbook/(service1)

- Zapbook pages with two services: zapier.com/apps/(service1)/integrations/(service2)

- Zap Template pages (a Zap Template is a blueprint for a repeated task): zapier.com/zapbook/zaps/(number)/(zap)
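Segmenting by URL path can be automated with a few regular expressions. A minimal Python sketch: the patterns below are our own guesses based on the three URL shapes listed above, not Zapier’s actual routing rules, and `segment_for` and `segment_urls` are hypothetical helpers. URLs need a scheme (`https://`) so that `urlparse` can separate the path from the domain.

```python
import re
from collections import defaultdict
from typing import Optional
from urllib.parse import urlparse

# Hypothetical regexes modeled on the URL structures listed above.
SEGMENTS = {
    "zapbook_one_service": re.compile(r"^/zapbook/[^/]+/?$"),
    "zapbook_two_services": re.compile(r"^/apps/[^/]+/integrations/[^/]+/?$"),
    "zap_template": re.compile(r"^/zapbook/zaps/\d+/[^/]+/?$"),
}


def segment_for(url: str) -> Optional[str]:
    """Return the name of the segment a URL belongs to, or None."""
    path = urlparse(url).path
    for name, pattern in SEGMENTS.items():
        if pattern.match(path):
            return name
    return None


def segment_urls(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs by segment; each segment can host its own experiment."""
    groups = defaultdict(list)
    for url in urls:
        name = segment_for(url)
        if name is not None:
            groups[name].append(url)
    return dict(groups)


urls = [
    "https://zapier.com/zapbook/trello",
    "https://zapier.com/apps/trello/integrations/calendly",
    "https://zapier.com/zapbook/zaps/1234/example-zap",
    "https://zapier.com/blog/some-post",  # matches no segment
]
print(segment_urls(urls))
```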

How long should I run an A/B test?

At RankScience, we run experiments for 21 to 28 days to get the full dataset, but there’s no hard rule, because sites have such different volumes of search traffic. Traffic is the fuel for A/B tests: the more fuel you have at your disposal, the sooner your experiments reach statistical significance and the faster you can conclude them.

This is why it’s quicker to A/B test changes to pages that have been up and running for a while. They already rank on Google, and you’ve measured steady traffic coming their way. Contrast this with A/B testing brand-new pages that are still being developed and launched: they need time to attract significant, stable traffic.
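The chapter doesn’t prescribe a particular significance test. One approach that fits page-level splits is a permutation test: compute each page’s before-and-after change, then check how often randomly reshuffling the control/variant labels produces a gap as large as the one you observed. The sketch below is our own assumption of a reasonable method, not RankScience’s or Pinterest’s, and the per-page changes in the example are made up.

```python
import random
from statistics import fmean


def permutation_test(control: list[float], variant: list[float],
                     n_iter: int = 10_000, seed: int = 0) -> tuple[float, float]:
    """Two-sided permutation test on per-page changes.

    Each value is one page's change in a metric (e.g. CTR after minus CTR
    before). Returns (observed difference in means, approximate p-value).
    """
    observed = fmean(variant) - fmean(control)
    pooled = control + variant  # new list, so the inputs are not shuffled
    n_variant = len(variant)
    rng = random.Random(seed)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = fmean(pooled[:n_variant]) - fmean(pooled[n_variant:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Add-one smoothing keeps the estimated p-value above zero.
    return observed, (extreme + 1) / (n_iter + 1)


control_changes = [0.0, 0.001, -0.001, 0.0005, -0.0005] * 10
variant_changes = [x + 0.01 for x in control_changes]
diff, p = permutation_test(control_changes, variant_changes)
print(f"difference: {diff:+.4f}, p = {p:.4f}")
```

With less traffic, per-page changes are noisier, so the same true effect yields a larger p-value, which is another way of seeing why low-traffic sites need longer tests.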

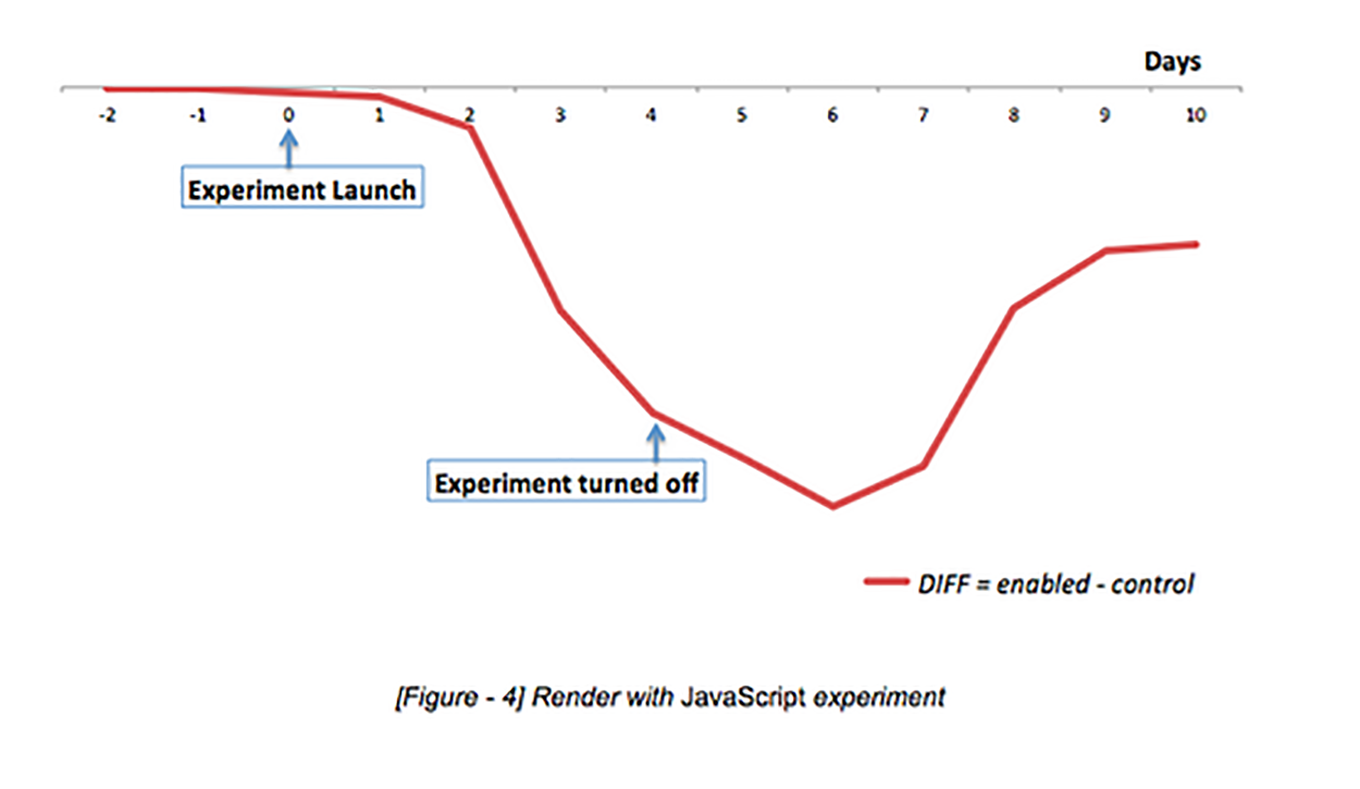

In Pinterest’s case (consider them a high-traffic site), they saw initial results from a change after just a couple of days. The variant group would then creep further away from the control group until it stabilized after one or two weeks. In one test that rendered pages with JavaScript, it took them just two days to see a disastrous dip. They turned the experiment off after four days, and traffic started to tick back up on day six.

Tips for better SEO split testing:

- Before making an SEO change, set expectations with your team that you’re running experiments, and site traffic may be rocky in the next few days or weeks. It may be a good idea to prepare other marketing channels (like paid SEM, social, etc.) to step in and compensate for temporary losses in traffic if need be, so your overall business doesn’t take a hit.

- Make sure to regularly update your sitemap and prune unnecessary pages from the index. This preserves Google’s crawl budget for necessary pages.
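Regenerating a sitemap that leaves out pruned pages is easy to script. A minimal Python sketch using the standard library’s `xml.etree.ElementTree` and the sitemaps.org namespace; `build_sitemap` and the `pruned` set are hypothetical. Note that dropping a URL from the sitemap doesn’t by itself remove it from Google’s index; that usually takes a `noindex` directive or removing the page.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str], pruned: set[str]) -> str:
    """Return sitemap XML listing every URL that is not marked for pruning."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        if url in pruned:
            continue  # leave pruned pages out of the sitemap
        loc = ET.SubElement(ET.SubElement(urlset, "url"), "loc")
        loc.text = url
    return ET.tostring(urlset, encoding="unicode")


print(build_sitemap(
    ["https://example.com/a", "https://example.com/b", "https://example.com/old"],
    pruned={"https://example.com/old"},
))
```

ElementTree escapes characters such as `&` in URLs automatically, which the sitemap format requires.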

Tools to help you

There are three paths you can take when you try SEO split-testing. We’ll show you the DIY version, then we’ll talk about building or buying experimentation tools that make the process more accurate and hands-free.

DIY

If you want to split-test without the help of testing software, you can manually group the pages yourself and watch their traffic numbers change in Google Search Console.

- Divide site pages into two groups: control and variant.

- Make a change to the variant group.

- Wait 21–28 days while monitoring clicks, impressions, and CTR with Search Console.

- Collect results: In Search Console, filter for your control group pages and compare two equal date ranges: the 21–28 days before you made the change and the 21–28 days after it (Search Console lets you compare date ranges). Now you have the before-and-after average CTR for your control group.

- Do the same for your variant group pages.

- Compare the two groups: if the variant group’s CTR improved more than the control group’s over the same period, the SEO change was likely beneficial; if it improved less or dropped, it likely wasn’t.
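The final comparison boils down to one question: how much did the variant group’s CTR change, relative to how much the control group’s changed over the same period? The control group absorbs site-wide swings (seasonality, algorithm updates), so subtracting its change isolates the effect of your edit. A minimal Python sketch with made-up numbers; the function names are our own.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate, guarding against zero impressions."""
    return clicks / impressions if impressions else 0.0


def relative_change(before: float, after: float) -> float:
    """Fractional change, e.g. 0.05 means +5%."""
    return (after - before) / before if before else 0.0


def compare_groups(control_before, control_after, variant_before, variant_after):
    """Each argument is a (clicks, impressions) tuple for one group and window."""
    control_change = relative_change(ctr(*control_before), ctr(*control_after))
    variant_change = relative_change(ctr(*variant_before), ctr(*variant_after))
    return {
        "control_change": control_change,
        "variant_change": variant_change,
        # Positive net effect: the variant beat the control.
        "net_effect": variant_change - control_change,
    }


result = compare_groups(
    control_before=(1000, 50_000), control_after=(1050, 50_000),
    variant_before=(1000, 50_000), variant_after=(1200, 50_000),
)
print(result)  # control about +5%, variant about +20%, net about +15 points
```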

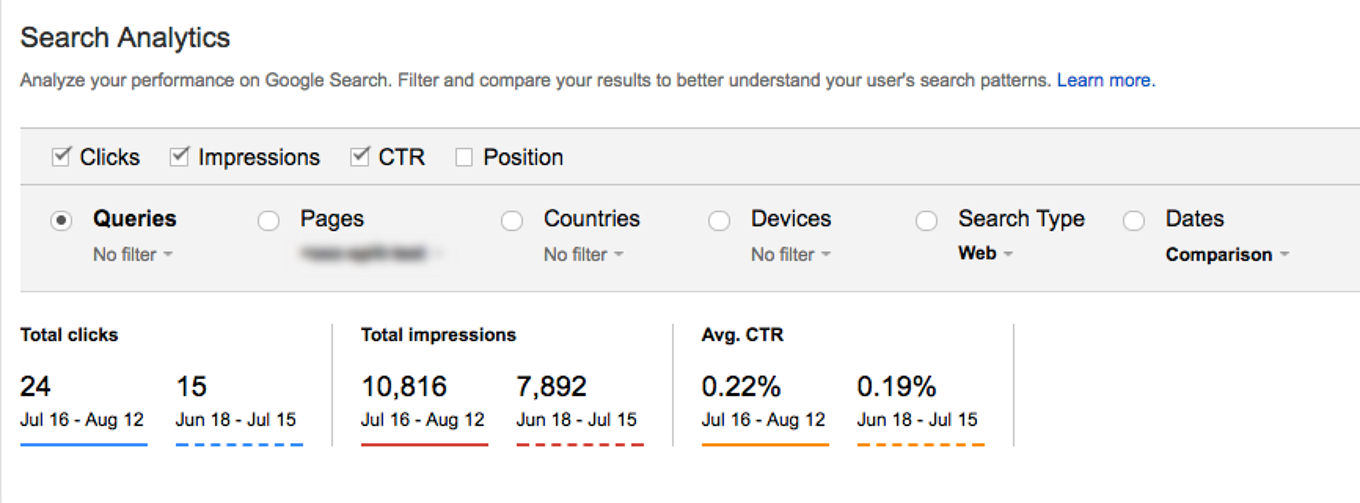

Here’s the stats page in Google Search Console, filtered for one group of pages. Check the boxes for Clicks, Impressions, and CTR, and filter to compare the date ranges before and after the test.

Build your own tool

Some companies choose to make the investment in building their own A/B testing tools to more easily and accurately read results. The one Pinterest built was centered around the key metric of traffic, as measured by the number of unique sessions referred by search engines to Pinterest pages. It compared the averages of the difference in traffic between the two groups before and after the experiment launch.

To put the tool together, they built and connected three components:

- Configuration to define experiments and group ranges

- Daily data job to compute traffic to the pages in each experiment group

- Dashboard to view results
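The three components can be sketched in a few dozen lines of Python. This is our own illustration, not Pinterest’s code: the config format, the SHA-256 hash bucketing, and the `daily_job` function are assumptions. Hashing each URL into a fixed bucket keeps a page in the same group every day without storing a lookup table, and salting the hash with the experiment name reshuffles pages between experiments.

```python
import hashlib
from collections import defaultdict
from typing import Optional

# 1. Configuration (hypothetical format): pages hash into buckets 0-99,
#    and each experiment group owns a range of buckets.
EXPERIMENT = {
    "name": "example_experiment",
    "groups": {"control": range(0, 50), "variant": range(50, 100)},
}


def bucket(url: str, experiment: str, n_buckets: int = 100) -> int:
    """Deterministic bucket for a URL, salted with the experiment name."""
    digest = hashlib.sha256(f"{experiment}:{url}".encode("utf-8")).hexdigest()
    return int(digest, 16) % n_buckets


def group_of(url: str, config: dict) -> Optional[str]:
    """Return the experiment group a URL falls into, if any."""
    b = bucket(url, config["name"])
    for group, buckets in config["groups"].items():
        if b in buckets:
            return group
    return None


# 2. Daily data job: sum one day's search-referred sessions per group.
def daily_job(sessions_by_url: dict[str, int], config: dict) -> dict[str, int]:
    totals = defaultdict(int)
    for url, sessions in sessions_by_url.items():
        group = group_of(url, config)
        if group is not None:
            totals[group] += sessions
    return dict(totals)


# 3. "Dashboard": here just a printed summary of one day's made-up totals.
day = {f"https://example.com/board/{i}": 10 + i % 7 for i in range(1_000)}
for group, total in sorted(daily_job(day, EXPERIMENT).items()):
    print(f"{group}: {total} sessions")
```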

Read more about their experimentation framework in Julie’s post.

Use a third-party tool

You can also use a third-party tool if you don’t want to build an internal one. RankScience is one example: a CDN that sits downstream from your CDN and upstream from your origin web server. It automates SEO changes, tweaking your HTML and running experiments for 21–28 days before calculating the results for you.

Asad explains why Zapier went the third-party route: “In-house SEOs typically lack the resources to do proper A/B testing because they usually have to rely on development teams, which have other priorities. With a third-party tool, those of us on the marketing team can try experiments without going through all the steps with other teams.”

Reminder:

Regardless of which option you choose, you need to have basic SEO tools in place. Enable Google Analytics and Google Search Console (formerly called Google Webmaster Tools) as soon as you can to start collecting data.

So, what should I test?

There’s one important truth about SEO: There’s no one list of changes I could recommend that you make to your site. It’s really not one-size-fits-all. The test ideas are endless. Some unique, unassuming tactics may end up working like a charm for you, while others will actually hurt your traffic. (This is why A/B testing is so helpful.)

As you start searching for test ideas, you’ll find hundreds of SEO site audit guides and technical SEO checklists on Google. While these lists are a great starting point, we recommend digging deeper. Anything can be worth a try!

Start by reflecting on high-level questions such as:

What are your SEO goals? Do you want to grow traffic for your whole site? Or, do you want to focus on growing traffic for a few pages that represent important verticals or products?

Will you optimize existing pages that already have traffic, or will you start from scratch with new pages? Check how much search traffic volume you’re already receiving. The less traffic you have, the longer you’ll have to run tests.

What does your available search data tell you about searcher intent? Take a look at Google Analytics and Search Console to learn where your existing search traffic is.

What engineering resources are available? This may limit you to testing site changes you’re able to implement on your own.

Tips for sustainable growth:

- When mapping out a strategy for data-driven SEO, the most important thing to keep in mind is building toward sustainable growth. It’s easy to build traffic with SEO tactics that aren’t quite “Google-legal.” These are called black-hat SEO tactics (for example, keyword stuffing, cloaking, or buying links), and the penalties for using them are steep, such as being excluded from search results entirely.

- There are also grey-hat techniques, which are “technically” legal but ethically questionable, like using fake reviews or incorrect schema markup. These can get you a manual penalty. It’s not worth it!

My first three things to test

Alright, even though I insist SEO isn’t one-size-fits-all, I do have a “first three” checklist that fixes common mistakes many B2B SaaS companies make.

1. Title Tags

Best practice: Put important keywords first in title tags.

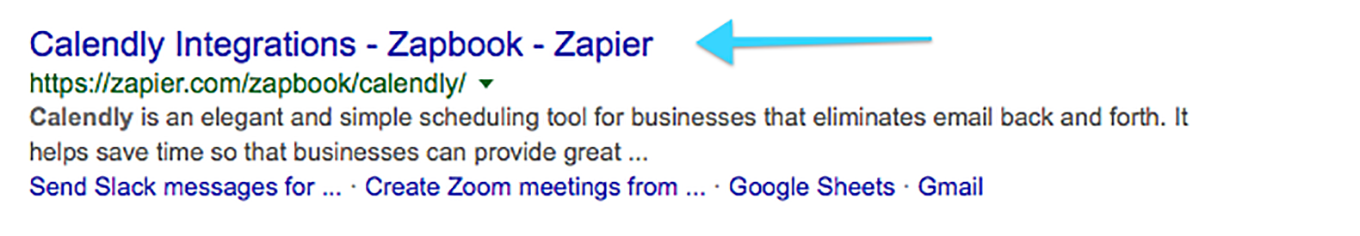

Title tags become a page’s clickable headline in search engine results, and are critically important for usability, SEO, and social sharing.

I often see B2B SaaS folks put their company name at the beginning of a title tag, which typically isn’t the best choice for SEO. If you’ve done this, switch them around.

- Common mistake: [company name] - [keyword-filled description]

- Better: [keyword-filled description] - [company name]

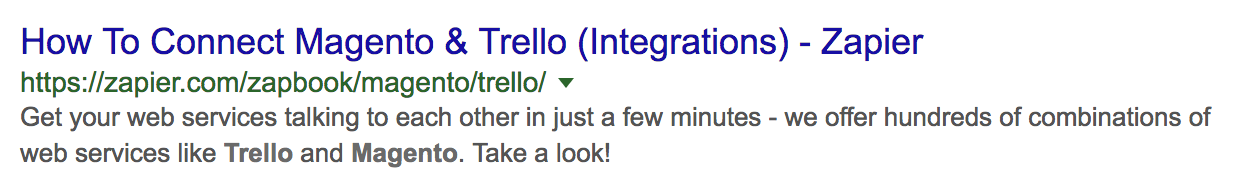

In the example above, Zapier has it the better way:

- Common mistake: [Zapbook - Zapier] - [Calendly Integrations]

- Better: [Calendly Integrations] - [Zapbook - Zapier]
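If you have many pages with brand-first titles, the swap is mechanical. A minimal Python sketch, assuming titles follow a `[brand] - [description]` pattern with a consistent separator; `keyword_first` is a hypothetical helper. In a split test, you would apply it only to the variant group.

```python
def keyword_first(title: str, brand: str, sep: str = " - ") -> str:
    """Move a leading brand segment to the end of a title tag."""
    prefix = brand + sep
    if title.startswith(prefix):
        return title[len(prefix):] + sep + brand
    return title  # already keyword-first (or no brand prefix): leave unchanged


print(keyword_first("Zapbook - Zapier - Calendly Integrations", "Zapbook - Zapier"))
# Calendly Integrations - Zapbook - Zapier
```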

2. Meta Descriptions

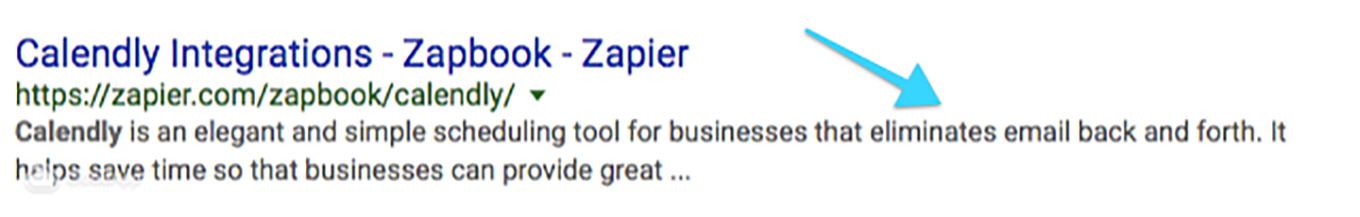

Best practice: Make your meta descriptions enticing to click on!

Meta descriptions are the descriptive texts in search engine results, and together with title tags they contribute to the first impression you make on a potential customer.

I see a lot of B2B SaaS companies writing metas with fluffy branding language. Instead, focus your meta descriptions around your unique value propositions (UVPs): what a user can get from you.

In the example above, Zapier highlights Calendly’s value of eliminating email back and forth and helping businesses save time. You can test a few different UVPs to see what sticks.

Make your metas count!

3. H1 Headers

The H1 header is the main heading on the page a visitor lands on after clicking a search result. It’s typically the most emphasized, highest-level heading on the page, like a newspaper headline. On the RankScience homepage, ours says “Grow your website traffic with A/B testing.”

Make sure it contains important keywords. I often see companies sacrifice H1 headers for the sake of site design, either removing them or filling them with non-optimized text. Instead, we recommend keeping a proper H1 tag in the page’s HTML and using CSS to style it to fit the design.
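To check this across many pages, a small audit script can flag pages whose H1 is missing or lacks a target keyword. A minimal sketch using Python’s standard-library `html.parser`; `H1Collector`, `audit_h1`, and the keyword list are our own, and the substring match is a deliberately crude check.

```python
from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collect the text inside every <h1> element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.h1s: list[str] = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":  # html.parser lowercases tag names
            self._in_h1 = True
            self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.h1s[-1] += data  # includes text of nested tags like <em>


def audit_h1(html: str, keywords: list[str]) -> list[str]:
    """Return a list of problems; an empty list means the H1 looks fine."""
    parser = H1Collector()
    parser.feed(html)
    parser.close()
    if not parser.h1s:
        return ["no <h1> found"]
    text = " ".join(parser.h1s).lower()
    return [f"keyword missing from <h1>: {kw!r}"
            for kw in keywords if kw.lower() not in text]


page = "<h1>Grow your website traffic with <em>A/B testing</em></h1>"
print(audit_h1(page, ["A/B testing", "SEO"]))
```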

What else can I test?

Once you’ve tried these fundamental SEO changes, get creative as you come up with your own.

Here are just a few areas of a page that Zapier tests.

Asad’s favorite advice for finding great ideas? Talk to customers. “SEO works so hard to please the Google bot, but in reality, there are actual people using your site,” he says. “So my tip is to talk to customers, get their stories, and then use their actual language. That’s the most effective content to engage them.” And if he is browsing another company’s site and sees a feature he likes, he might test that too.

Do I need to test *everything* before rolling it out sitewide?

Nope, no need. Here’s Asad’s approach: “We always A/B test large-scale things, like the meta descriptions across all our 500,000 pages—and we’ll get quick results because of how many pages we have. And if we’re changing anything facing the search engine results page (SERP), like the title tags and descriptions, that’ll impact click-through rates, so we’ll test it no matter what.”

“But if there’s something very small and low risk, we’ll just follow best practices and implement it globally—like tweaking alt text on images or adding links to the site footer.”

Test the unexpected

You can test just about anything—even small UX changes!

RankScience co-founder Dillon Forest was mapping out potential changes and experiments for Zapier when he noticed that some of their site text was small and hard to read. If he was having a hard time reading it, he figured potential site visitors might have the same issue. The text was set at 12-point font.

He made the font a little bigger, 16 point, and A/B tested it.

This actually turned into an SEO win! After 28 days, we saw increased impressions and clicks to those pages. Our theory? The increased font size led to more clicks, which led to a higher SERP position, which drove more traffic to the site. The win has continued to stand to this day.

So far, we’ve talked about what SEO A/B testing is, the tools you need to do it, and a few tests you can try. There’s one last thing to consider that might come up as you start doing more SEO testing at your company: the need to balance it with branding.

Balancing branding and SEO

Every now and then, I see a tug-of-war between a company’s branding choices and their SEO changes, where one compromises the other.

Here’s an example. Asad once added the word “sync” to meta description tags, as in “sync Slack with Trello.” He was excited to see a strong, positive test result and brought it to the rest of the marketing team, but learned that “sync” is actually against Zapier’s brand guidelines.

Technically, Zapier is a service that moves data to other services, but it doesn’t exactly sync the two. So they couldn’t use that idea, even though it provided a temporary uplift in click-through rate. (And anyway, users would probably feel misled when they eventually figured out there’s no real syncing. That would hurt Zapier’s brand and ranking in the long run.)

Here’s a different example from an early title tag test for the Zapier app directory pages.

The Zapier app directory is called the “Zapbook,” a branded word, and their branding tagline is “Better Together.” Zapier used these two phrases in their title tags.

For instance, on the landing page for the integration of Magento (service1) with Trello (service2), the title tag read:

- Original title: Magento + Trello: Better Together - Zapbook - Zapier

- Sitewide convention (for https://zapier.com/zapbook/(service1)/(service2)): (service1) + (service2): Better Together - Zapbook - Zapier

But we wondered whether those phrases carried enough meaning for users. While “Zapbook” was on brand, we saw it as a weak keyword taking up valuable real estate. “Better Together” makes sense from a branding perspective, but provides little insight into what Zapier actually does. We tried a new title tag that focused more on utility.

- New title: Connect Magento to Trello Integration (Tutorial)

- Sitewide convention: Connect (service1) to (service2) Integration (Tutorial) - Zapier

We chose this title because “integration” is a strong descriptive keyword. “Tutorial” was an experiment—we often test descriptive keywords like “tutorial,” “examples,” and “lessons” when appropriate.

This showed a 5% uplift in CTR from the original.

So we continued to iterate. Search Console showed us that a lot of site visitors were searching with the words “how to connect,” so we tried to match their natural language for our next test.

- Newer title: How to connect Magento to Trello (Integrations) - Zapier

- Sitewide convention: How to connect (service1) to (service2) (Integrations) - Zapier
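Because each title is generated from a sitewide convention, testing a new title means swapping one template for another on the variant pages. A minimal Python sketch of the three conventions above; the dictionary keys and the `title_for` helper are hypothetical, and a real site would plug this into its own templating system.

```python
# The three title conventions from this section, as format strings.
TITLE_TEMPLATES = {
    "original": "{s1} + {s2}: Better Together - Zapbook - Zapier",
    "utility": "Connect {s1} to {s2} Integration (Tutorial) - Zapier",
    "how_to": "How to connect {s1} to {s2} (Integrations) - Zapier",
}


def title_for(template: str, service1: str, service2: str) -> str:
    """Render one title convention for a two-service integration page."""
    return TITLE_TEMPLATES[template].format(s1=service1, s2=service2)


for name in TITLE_TEMPLATES:
    print(f"{name}: {title_for(name, 'Magento', 'Trello')}")
```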

This test showed a 17% CTR uplift!

This is all to say that SEO changes sometimes require a deprioritization or shift in branding, and vice versa. We don’t have a clear answer for whether to prioritize branding or SEO—that’s a decision that each company/marketer needs to make—but at least when you’re data-driven about it, you can see what the upside would be. In Zapier’s case, for their title tags, the 17% uplift was worth it, but in the case of “sync,” it wasn’t.

Off to the races!

We hope you’re leaving with an understanding of A/B testing for SEO and a few ideas for how to get started. Little efforts in SEO can sometimes yield big results, and experimentation makes the relationship between the two much more measurable.

“The impact of an SEO A/B testing framework at Zapier has been very positive. Just the time saved alone is great, where we don’t have to wait for engineering on our end,” Asad says. “I think that every company should have this kind of resource.”

Give it a shot—we wish you luck!