What I wished I'd known when building our attribution model

Here at Clearbit, we've built a powerful attribution model that provides a single view of all our customers and their journeys. The model captures lead and customer behaviors across all of Clearbit's marketing channels and products, combining them into a single source of truth that lets us:

- Measure the revenue impact of marketing campaigns.

- Score leads on intent/engagement.

- See the details of each individual lead or customer's journey with Clearbit, right down to their converting touchpoints.

- Personalize and hyper-target emails, ad campaigns, and sales conversations based on a lead's actions.

- Flag leads as PQLs or MQLs, and identify whether or not they're in our ideal customer profile.

- See which customers are lagging in product usage so CS can reach out to them.

Best of all, this information is operationalized instead of just being locked up in our data warehouse. We push data back out to everyday sales and communications tools — so salespeople and marketers don't need to write a bunch of SQL to see it and act on it.

But, building an attribution model didn't come easy. Along the way, we encountered tradeoffs, course corrections, and mindset shifts — and today, I'm going to share some things I wish I'd known while designing and building this attribution model.

Tips for building an attribution model

Before we get into how we built our attribution model at Clearbit. Here are 3 tips for building an attribution model that everyone should consider before getting started.

1. Collaboration is key

Building an attribution model is a team effort. Meet regularly with stakeholders to better understand their needs.

2. Start small, find what works for you, then iterate

Don’t worry about trying to attribute every single user event. Focus on the important events first then expand as you learn.

3. Make it accessible

There’s no point in building an attribution model if you’re not going to use it. Make sure your attribution model is accessible from all the right tools at the right time. (We go into this more later on.)

Clearbit's attribution model, in a nutshell

You can find more detail about the model's data architecture here, but to save you a click, here's a high-level overview.

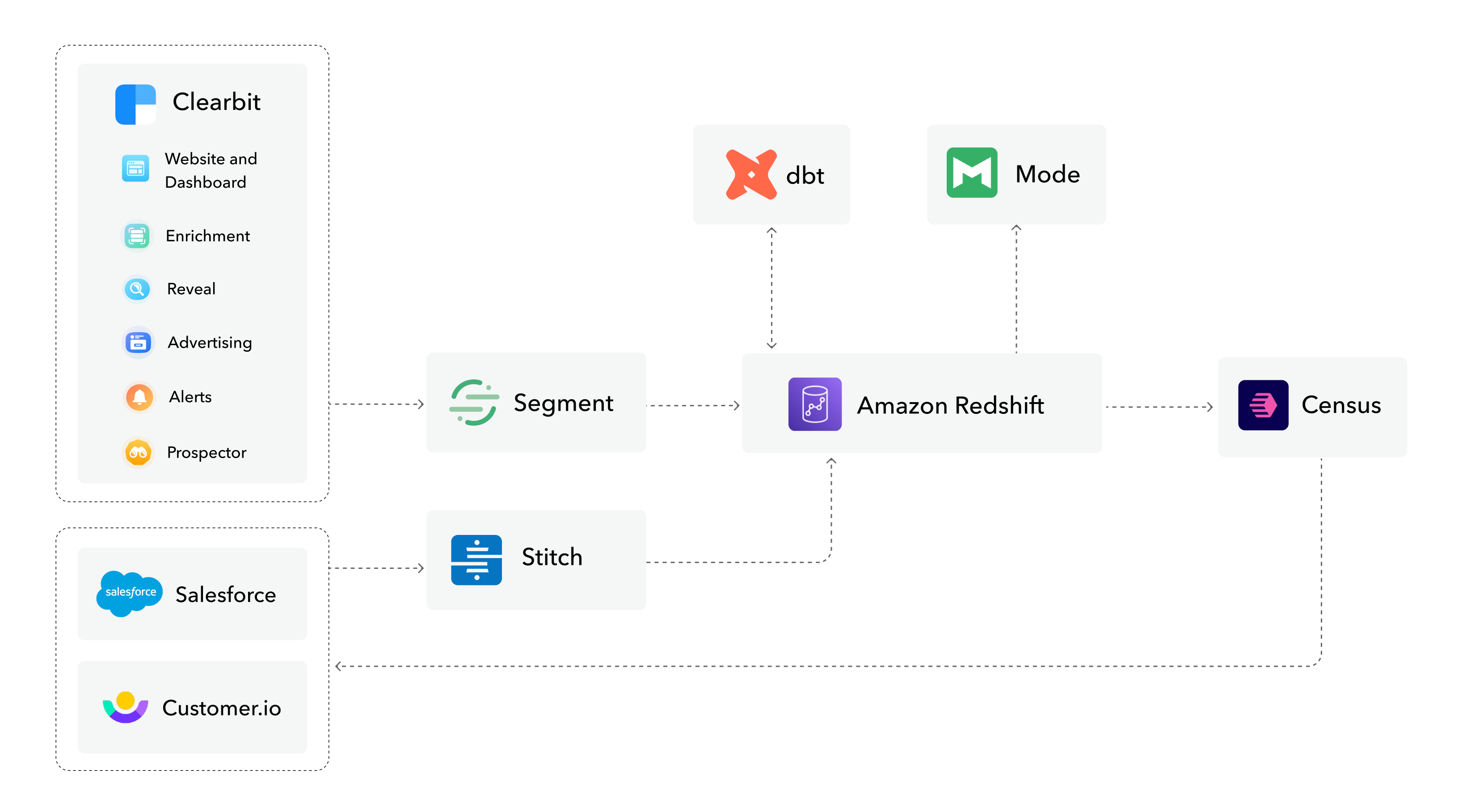

The tech stack centers around our data warehouse, Redshift. We use Segment and Stitch to capture data across channels and send it to Redshift. dbt sits on top of Redshift and transforms the data into simple master tables — our single source for attribution, our intent scoring, and more.

Finally, we send data to other tools with Census, which is a reverse ETL; we use it for a bit of last-minute data formatting before syncing into Salesforce and Customer.io (our email platform). Any Clearbit teammate can also use Mode to query the data and explore visualizations, even if they don't have a technical background.

Since we've stitched together a number of tools to make a custom platform, the system is quite flexible. Today, the model combines information from more than 10 sources across Clearbit products, webpages, and marketing channels — but it can potentially capture data from pretty much any channel, in any way we want it.

It's powerful, it's customized ... and it might be more than we really need today in terms of Clearbit's company maturity. We have the keys to a Ferrari, even if our company is still at the stage when a Prius would do just fine. But one day soon, we'll have higher lead volume, more sales and marketing complexity, and we're going to need all that Italian engineering.

Because of today's technology for data teams, there are very few things we can't do. The combination of Segment, Census, and dbt can get us any data we need in any way we want to see it. But it doesn't matter how cool a model is if your team isn't ready for it — it'll sit on the sidelines.

"It doesn't matter how cool a model is if your team isn't ready for it — it'll sit on the sidelines."

"It doesn't matter how cool a model is if your team isn't ready for it — it'll sit on the sidelines."- Julie Beynon, Head of Analytics at Clearbit

And that leads us to our first major learning: to start small. For example, at first, it was tempting to make our engagement scoring really complex — if you've got a Ferrari, you want to drive it fast — but I saw that we needed to whittle it down.

If I were to go back and do it again, I'd build for where our team was at. We even have a file for our engagement scoring that says 'Take 3: Back to Basics', because Takes 1 and 2 were a hundred pages long. A simple yes/no, good/bad score with a few data points and interactions is a great (and recommended) place to start.

we've already implemented the next iteration: take 4!

we've already implemented the next iteration: take 4!The same thing happened with our attribution tracking…

1. Don't make all data points available in all tools at once

I tried to boil the ocean initially to get our data "actionable", because one of the benefits to dbt is that it lets you create access to and build models with data that would otherwise be siloed away. So I'd wanted to take all of the work that we'd done on the reporting side and make it instantly available in tools like Customer.io and Salesforce for everyone.

But at first ,we overdid it in making every user event available. My teammates were overwhelmed by all that data and they just didn't know how to use it. We also lacked proper documentation and clarity around definitions.

In truth, our marketing team needed only a handful of data points to pull off the strategies they had in mind. So I discussed their planned campaigns with them, to figure out precisely what it would take to make them more valuable. We did a big cleanup and distilled 1,000 data points down to just 30.

Our default dataset is now leaner, but if a marketing teammate needs to track a data point that's not included, that's no problem — an analyst can easily write a simple line of code for a new field. This process is no more than a 15-minute job, 30 minutes with QA. So, we learned to make a tradeoff that gave us a more usable model, in exchange for less default coverage — but with the ability to easily add more data points as needed.

My advice, if you're in the same boat? Start by having conversations with teammates and discuss how the data will be applied in campaigns.

2. Don't try to make all data available in real time

If the first lesson was to simplify the number of data points, the second was to simplify data refreshes.

When we started building an attribution model, we wanted the data we sent to our tools to be as fresh as possible, especially in our email tool, Customer.io. Up-to-date data lets us trigger perfectly personalized emails as soon as a user takes an important action, like an account signup.

So at first, we refreshed data in dbt every 15 minutes. But this overloaded — and eventually broke — the system.

We fixed the problem with a few simple steps:

- We reduced the frequency of dbt builds from 15 minutes to four hours.

- We prioritized and fast-tracked only a tiny slice of data points that we needed in real time for personalized emails. For example, when a new user signs up for Clearbit, we want our first email to incorporate important attributes or segments, like job title, first name, or industry. We move these priority data points directly from Segment to Census and then into Customer.io, temporarily skipping dbt so they're immediately available. The rest of the data can wait for the next dbt build.

- When people query the database, we now give priority to ad hoc queries over pre-scheduled queries. That way, people get quicker answers to their questions instead of having to wait for everything to load.

- We audited our code and did a little cleanup and table optimization to minimize expensive queries.

Communication is at the heart of this lesson, too. Analysts and marketing teammates should talk to each other to prioritize the data points and identify which ones they truly need in real time. That way, they can pull off the campaigns of their dreams and the system can run quickly.

3. Our setup puts the analyst in the hot seat

Our model seriously levels up our ability to do data-driven communications like automated emails and personalization at scale. But this approach also raised the stakes for our model's accuracy and performance — and increased the cost of bad data.

When data is incorrect or missing, it doesn't just make the numbers in a Mode report look wonky, it affects the end user. Bad data can cause email misfires and hurt the entire communication chain, making the Clearbit experience disjointed — if not surreal — for our living, breathing customers.

And who gets a phone call when the personalization in a bunch of emails goes pear-shaped? That's right: the ops/analytics folks. The model puts us in the critical path of customer communication. When something breaks, we buckle up our toolbelt.

Because of this extremely direct tie to the customer's inbox, the analyst role is fundamentally different at Clearbit than at other companies. We needed to shift our mindset around this. More testing and collaborative QA is also needed, so we use dbt tests and work closely with the marketing team whenever they request a new data point, especially ones that get flow into communication tools. I need to double check that the numbers make sense before pushing a new model or report live.

A new dynamic between ops and marketing teams

Our analyst/ops team now has a direct influence on our end-user experience. We're a multiplier for other teammates at Clearbit, enabling everyone to create their own campaigns, run their own reports, and become data-driven marketers.

Even though we've built a model that can technically handle all data in our marketing universe, we've learned to prioritize, to keep our system nimble and usable. We chose to strip back unnecessary data points and focus on the most important subset, even if it takes a little extra work to add requested events back in. And we were even more choosy when we prioritized an even smaller dataset to update in real-time for emails.

But the biggest lesson we learned when building an attribution model was all about collaboration and discussion. There's no way a team can effectively prioritize unless analysts work closely with marketers and salespeople — those people who will ultimately use the data. Today, we dream up campaigns together, then work backward to get the data we need to pull it off.

- Dive into our comprehensive marketing attribution book to learn how she built the attribution model that provides a single view of our customers.

- Catch her earlier chat with dbt on lessons learned from building the model

- Or, learn her thoughts on building a PQL model in Developing a PQL: The tools and techniques you need to accomplish it